Getting Started

- 1: Introduction

- 2: Use Cases

- 3: Quick Start

- 4: Ecosystem

- 5: Upgrading

- 6: KRaft vs ZooKeeper

- 7: Compatibility

- 8: Docker

1 - Introduction

What is event streaming?

Event streaming is the digital equivalent of the human body’s central nervous system. It is the technological foundation for the ‘always-on’ world where businesses are increasingly software-defined and automated, and where the user of software is more software.

Technically speaking, event streaming is the practice of capturing data in real-time from event sources like databases, sensors, mobile devices, cloud services, and software applications in the form of streams of events; storing these event streams durably for later retrieval; manipulating, processing, and reacting to the event streams in real-time as well as retrospectively; and routing the event streams to different destination technologies as needed. Event streaming thus ensures a continuous flow and interpretation of data so that the right information is at the right place, at the right time.

What can I use event streaming for?

Event streaming is applied to a wide variety of use cases across a plethora of industries and organizations. Its many examples include:

- To process payments and financial transactions in real-time, such as in stock exchanges, banks, and insurance companies.

- To track and monitor cars, trucks, fleets, and shipments in real-time, such as in logistics and the automotive industry.

- To continuously capture and analyze sensor data from IoT devices or other equipment, such as in factories and wind parks.

- To collect and immediately react to customer interactions and orders, such as in retail, the hotel and travel industry, and mobile applications.

- To monitor patients in hospital care and predict changes in condition to ensure timely treatment in emergencies.

- To connect, store, and make available data produced by different divisions of a company.

- To serve as the foundation for data platforms, event-driven architectures, and microservices.

Apache Kafka® is an event streaming platform. What does that mean?

Kafka combines three key capabilities so you can implement your use cases for event streaming end-to-end with a single battle-tested solution:

- To publish (write) and subscribe to (read) streams of events, including continuous import/export of your data from other systems.

- To store streams of events durably and reliably for as long as you want.

- To process streams of events as they occur or retrospectively.

And all this functionality is provided in a distributed, highly scalable, elastic, fault-tolerant, and secure manner. Kafka can be deployed on bare-metal hardware, virtual machines, and containers, and on-premises as well as in the cloud. You can choose between self-managing your Kafka environments and using fully managed services offered by a variety of vendors.

How does Kafka work in a nutshell?

Kafka is a distributed system consisting of servers and clients that communicate via a high-performance TCP network protocol. It can be deployed on bare-metal hardware, virtual machines, and containers in on-premises as well as cloud environments.

Servers: Kafka is run as a cluster of one or more servers that can span multiple datacenters or cloud regions. Some of these servers form the storage layer, called the brokers. Other servers run Kafka Connect to continuously import and export data as event streams to integrate Kafka with your existing systems such as relational databases as well as other Kafka clusters. To let you implement mission-critical use cases, a Kafka cluster is highly scalable and fault-tolerant: if any of its servers fails, the other servers will take over its work to ensure continuous operations without any data loss.

Clients: They allow you to write distributed applications and microservices that read, write, and process streams of events in parallel, at scale, and in a fault-tolerant manner even in the case of network problems or machine failures. Kafka ships with some such clients included, which are augmented by dozens of clients provided by the Kafka community: clients are available for Java and Scala including the higher-level Kafka Streams library, for Go, Python, C/C++, and many other programming languages as well as REST APIs.

Main Concepts and Terminology

An event records the fact that “something happened” in the world or in your business. It is also called a record or a message in the documentation. When you read data from or write data to Kafka, you do this in the form of events. Conceptually, an event has a key, value, timestamp, and optional metadata headers. Here’s an example event:

- Event key: “Alice”

- Event value: “Made a payment of $200 to Bob”

- Event timestamp: “Jun. 25, 2020 at 2:06 p.m.”
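As a rough illustration of the structure described above, the following Java sketch models an event as a plain record. The `Event` type, its field names, and the `of` factory are hypothetical and only mirror the conceptual parts (key, value, timestamp, optional headers); they are not classes from the Kafka client library. The UTC time zone used for the timestamp is also an assumption, since the example does not state one.

```java
import java.time.Instant;
import java.util.Map;

class EventExample {
    public static void main(String[] args) {
        // The example event from the text; the time zone (UTC) is an assumption.
        Event e = Event.of("Alice", "Made a payment of $200 to Bob",
                Instant.parse("2020-06-25T14:06:00Z"));
        System.out.println(e.key() + " -> " + e.value() + " @ " + e.timestamp());
    }
}

// Hypothetical model of an event; not a Kafka client class.
record Event(String key, String value, Instant timestamp, Map<String, String> headers) {

    // Convenience factory for an event without metadata headers.
    static Event of(String key, String value, Instant timestamp) {
        return new Event(key, value, timestamp, Map.of());
    }
}
```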

Producers are those client applications that publish (write) events to Kafka, and consumers are those that subscribe to (read and process) these events. In Kafka, producers and consumers are fully decoupled and agnostic of each other, which is a key design element to achieve the high scalability that Kafka is known for. For example, producers never need to wait for consumers. Kafka provides various guarantees such as the ability to process events exactly-once.

Events are organized and durably stored in topics. Very simplified, a topic is similar to a folder in a filesystem, and the events are the files in that folder. An example topic name could be “payments”. Topics in Kafka are always multi-producer and multi-subscriber: a topic can have zero, one, or many producers that write events to it, as well as zero, one, or many consumers that subscribe to these events. Events in a topic can be read as often as needed—unlike traditional messaging systems, events are not deleted after consumption. Instead, you define for how long Kafka should retain your events through a per-topic configuration setting, after which old events will be discarded. Kafka’s performance is effectively constant with respect to data size, so storing data for a long time is perfectly fine.
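To make the difference from a traditional queue concrete, here is a minimal in-memory sketch of the two rules described above: reading never removes events, and only the retention setting discards them. `TopicLogSketch` and its methods are hypothetical names for illustration; this says nothing about how Kafka stores data on disk or how its retention settings are named.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory stand-in for a single topic; not Kafka's storage layer.
class TopicLogSketch {
    // Each entry pairs an event value with the time it was appended.
    record Entry(long timestampMs, String value) {}

    private final List<Entry> entries = new ArrayList<>();
    private final long retentionMs;

    TopicLogSketch(long retentionMs) {
        this.retentionMs = retentionMs;
    }

    // Producers append; existing events are never overwritten.
    void append(long nowMs, String value) {
        entries.add(new Entry(nowMs, value));
    }

    // Reading returns the values and removes nothing, so any consumer can re-read.
    List<String> readAll() {
        return entries.stream().map(Entry::value).toList();
    }

    // Retention, not consumption, is what discards old events.
    void enforceRetention(long nowMs) {
        entries.removeIf(e -> nowMs - e.timestampMs() > retentionMs);
    }

    public static void main(String[] args) {
        TopicLogSketch payments = new TopicLogSketch(1_000);
        payments.append(0, "first");
        payments.append(900, "second");
        System.out.println(payments.readAll()); // both events
        System.out.println(payments.readAll()); // still both: reads do not delete
        payments.enforceRetention(1_500);       // "first" is now older than the retention period
        System.out.println(payments.readAll()); // only "second" remains
    }
}
```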

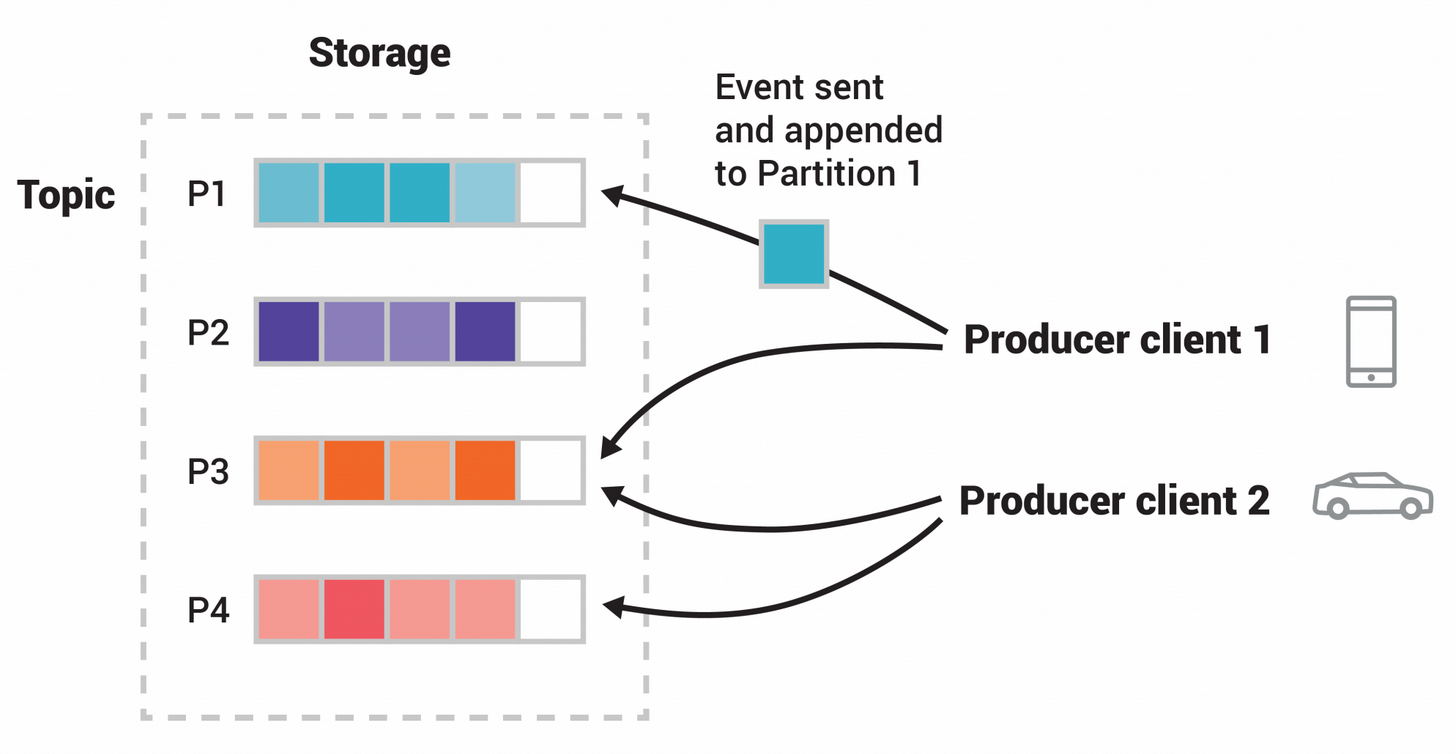

Topics are partitioned, meaning a topic is spread over a number of “buckets” located on different Kafka brokers. This distributed placement of your data is very important for scalability because it allows client applications to both read and write the data from/to many brokers at the same time. When a new event is published to a topic, it is actually appended to one of the topic’s partitions. Events with the same event key (e.g., a customer or vehicle ID) are written to the same partition, and Kafka guarantees that any consumer of a given topic-partition will always read that partition’s events in exactly the same order as they were written.

Figure: This example topic has four partitions P1–P4. Two different producer clients are publishing, independently from each other, new events to the topic by writing events over the network to the topic’s partitions. Events with the same key (denoted by their color in the figure) are written to the same partition. Note that both producers can write to the same partition if appropriate.
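The "same key, same partition" rule can be sketched as a deterministic function of the key. The helper below is a hypothetical illustration: it hashes the key bytes with `Arrays.hashCode` purely as a stand-in, whereas Kafka's Java producer hashes the serialized key with a different function (murmur2), so the partition numbers it prints will not match a real cluster.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Hypothetical partition chooser; the hash function is a stand-in, not Kafka's.
class KeyPartitioningSketch {

    static int partitionFor(String key, int numPartitions) {
        int hash = Arrays.hashCode(key.getBytes(StandardCharsets.UTF_8));
        // floorMod keeps the result in [0, numPartitions) even for negative hashes.
        return Math.floorMod(hash, numPartitions);
    }

    public static void main(String[] args) {
        int partitions = 4; // matches the four partitions P1-P4 in the figure
        for (String key : new String[] {"alice", "bob", "alice"}) {
            // The two "alice" events always land on the same partition.
            System.out.println(key + " -> P" + (partitionFor(key, partitions) + 1));
        }
    }
}
```

Because the mapping depends only on the key and the partition count, every producer that uses the same function sends a given key to the same partition, which is what preserves per-key ordering.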

To make your data fault-tolerant and highly available, every topic can be replicated, even across geo-regions or datacenters, so that there are always multiple brokers that have a copy of the data just in case things go wrong, you want to do maintenance on the brokers, and so on. A common production setting is a replication factor of 3, i.e., there will always be three copies of your data. This replication is performed at the level of topic-partitions.

This primer should be sufficient for an introduction. The Design section of the documentation explains Kafka’s various concepts in full detail, if you are interested.

Kafka APIs

In addition to command line tooling for management and administration tasks, Kafka has five core APIs for Java and Scala:

- The Admin API to manage and inspect topics, brokers, and other Kafka objects.

- The Producer API to publish (write) a stream of events to one or more Kafka topics.

- The Consumer API to subscribe to (read) one or more topics and to process the stream of events produced to them.

- The Kafka Streams API to implement stream processing applications and microservices. It provides higher-level functions to process event streams, including transformations, stateful operations like aggregations and joins, windowing, processing based on event-time, and more. Input is read from one or more topics in order to generate output to one or more topics, effectively transforming the input streams to output streams.

- The Kafka Connect API to build and run reusable data import/export connectors that consume (read) or produce (write) streams of events from and to external systems and applications so they can integrate with Kafka. For example, a connector to a relational database like PostgreSQL might capture every change to a set of tables. However, in practice, you typically don’t need to implement your own connectors because the Kafka community already provides hundreds of ready-to-use connectors.

Where to go from here

- To get hands-on experience with Kafka, follow the Quickstart.

- To understand Kafka in more detail, read the Documentation. You also have your choice of Kafka books and academic papers.

- Browse through the Use Cases to learn how other users in our world-wide community are getting value out of Kafka.

- Join a local Kafka meetup group and watch talks from Kafka Summit, the main conference of the Kafka community.

2 - Use Cases

Here is a description of a few of the popular use cases for Apache Kafka®. For an overview of a number of these areas in action, see this blog post.

Messaging

Kafka works well as a replacement for a more traditional message broker. Message brokers are used for a variety of reasons (to decouple processing from data producers, to buffer unprocessed messages, etc.). In comparison to most messaging systems, Kafka has better throughput, built-in partitioning, replication, and fault-tolerance, which makes it a good solution for large-scale message processing applications.

In our experience messaging uses are often comparatively low-throughput, but may require low end-to-end latency and often depend on the strong durability guarantees Kafka provides.

In this domain Kafka is comparable to traditional messaging systems such as ActiveMQ or RabbitMQ.

Website Activity Tracking

The original use case for Kafka was to be able to rebuild a user activity tracking pipeline as a set of real-time publish-subscribe feeds. This means site activity (page views, searches, or other actions users may take) is published to central topics with one topic per activity type. These feeds are available for subscription for a range of use cases including real-time processing, real-time monitoring, and loading into Hadoop or offline data warehousing systems for offline processing and reporting.

Activity tracking is often very high volume as many activity messages are generated for each user page view.

Metrics

Kafka is often used for operational monitoring data. This involves aggregating statistics from distributed applications to produce centralized feeds of operational data.

Log Aggregation

Many people use Kafka as a replacement for a log aggregation solution. Log aggregation typically collects physical log files off servers and puts them in a central place (a file server or HDFS perhaps) for processing. Kafka abstracts away the details of files and gives a cleaner abstraction of log or event data as a stream of messages. This allows for lower-latency processing and easier support for multiple data sources and distributed data consumption. In comparison to log-centric systems like Scribe or Flume, Kafka offers equally good performance, stronger durability guarantees due to replication, and much lower end-to-end latency.

Stream Processing

Many users of Kafka process data in processing pipelines consisting of multiple stages, where raw input data is consumed from Kafka topics and then aggregated, enriched, or otherwise transformed into new topics for further consumption or follow-up processing. For example, a processing pipeline for recommending news articles might crawl article content from RSS feeds and publish it to an “articles” topic; further processing might normalize or deduplicate this content and publish the cleansed article content to a new topic; a final processing stage might attempt to recommend this content to users. Such processing pipelines create graphs of real-time data flows based on the individual topics. Starting in 0.10.0.0, a light-weight but powerful stream processing library called Kafka Streams is available in Apache Kafka to perform such data processing as described above. Apart from Kafka Streams, alternative open source stream processing tools include Apache Storm and Apache Samza.

Event Sourcing

Event sourcing is a style of application design where state changes are logged as a time-ordered sequence of records. Kafka’s support for very large stored log data makes it an excellent backend for an application built in this style.
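A minimal sketch of the idea, using plain Java collections rather than Kafka: current state is not stored directly but derived by replaying the ordered log of changes. The `BalanceChanged` event type and the account example are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical event-sourced account balances: state is rebuilt from the log.
class EventSourcingSketch {
    record BalanceChanged(String account, long delta) {}

    // Replays the time-ordered log from the beginning to derive the current state.
    static Map<String, Long> replay(List<BalanceChanged> log) {
        Map<String, Long> balances = new LinkedHashMap<>();
        for (BalanceChanged e : log) {
            balances.merge(e.account(), e.delta(), Long::sum);
        }
        return balances;
    }

    public static void main(String[] args) {
        List<BalanceChanged> log = List.of(
                new BalanceChanged("alice", 500),
                new BalanceChanged("alice", -200),
                new BalanceChanged("bob", 200));
        System.out.println(replay(log)); // prints {alice=300, bob=200}
    }
}
```

Replaying a prefix of the same log yields the state as of an earlier point in time, which is one reason this style benefits from long retention.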

Commit Log

Kafka can serve as a kind of external commit-log for a distributed system. The log helps replicate data between nodes and acts as a re-syncing mechanism for failed nodes to restore their data. The log compaction feature in Kafka helps support this usage. In this usage Kafka is similar to the Apache BookKeeper project.
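The core idea behind log compaction can be sketched as "keep the latest value for each key". The code below is a simplified, hypothetical illustration on an in-memory list; real compaction works in the background on log segments and also handles deletion markers, none of which is modeled here.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Simplified idea of log compaction: retain only the most recent record per key.
class LogCompactionSketch {
    record Rec(String key, String value) {}

    static List<Rec> compact(List<Rec> log) {
        Map<String, Rec> latest = new LinkedHashMap<>();
        for (Rec r : log) {
            // Remove first so the surviving record keeps the position of its latest write.
            latest.remove(r.key());
            latest.put(r.key(), r);
        }
        return new ArrayList<>(latest.values());
    }

    public static void main(String[] args) {
        List<Rec> log = List.of(
                new Rec("node-a", "state-1"),
                new Rec("node-b", "state-1"),
                new Rec("node-a", "state-2"));
        // After compaction only node-b/state-1 and node-a/state-2 remain, in that order.
        System.out.println(compact(log));
    }
}
```

A node that re-syncs from the compacted log still ends up with the latest value for every key, while reading fewer records.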

3 - Quick Start

Step 1: Get Kafka

Download the latest Kafka release and extract it:

$ tar -xzf kafka_2.13-4.0.0.tgz

$ cd kafka_2.13-4.0.0

Step 2: Start the Kafka environment

NOTE: Your local environment must have Java 17+ installed.

Kafka can be run using local scripts and downloaded files, or using the Docker image.

Using downloaded files

Generate a Cluster UUID

$ KAFKA_CLUSTER_ID="$(bin/kafka-storage.sh random-uuid)"

Format Log Directories

$ bin/kafka-storage.sh format --standalone -t $KAFKA_CLUSTER_ID -c config/server.properties

Start the Kafka Server

$ bin/kafka-server-start.sh config/server.properties

Once the Kafka server has successfully launched, you will have a basic Kafka environment running and ready to use.

Using JVM Based Apache Kafka Docker Image

Get the Docker image:

$ docker pull apache/kafka:4.0.0

Start the Kafka Docker container:

$ docker run -p 9092:9092 apache/kafka:4.0.0

Using GraalVM Based Native Apache Kafka Docker Image

Get the Docker image:

$ docker pull apache/kafka-native:4.0.0

Start the Kafka Docker container:

$ docker run -p 9092:9092 apache/kafka-native:4.0.0

Step 3: Create a topic to store your events

Kafka is a distributed event streaming platform that lets you read, write, store, and process events (also called records or messages in the documentation) across many machines.

Example events are payment transactions, geolocation updates from mobile phones, shipping orders, sensor measurements from IoT devices or medical equipment, and much more. These events are organized and stored in topics. Very simplified, a topic is similar to a folder in a filesystem, and the events are the files in that folder.

So before you can write your first events, you must create a topic. Open another terminal session and run:

$ bin/kafka-topics.sh --create --topic quickstart-events --bootstrap-server localhost:9092

All of Kafka’s command line tools have additional options: run the kafka-topics.sh command without any arguments to display usage information. For example, it can also show you details such as the partition count of the new topic:

$ bin/kafka-topics.sh --describe --topic quickstart-events --bootstrap-server localhost:9092

Topic: quickstart-events TopicId: NPmZHyhbR9y00wMglMH2sg PartitionCount: 1 ReplicationFactor: 1 Configs:

Topic: quickstart-events Partition: 0 Leader: 0 Replicas: 0 Isr: 0

Step 4: Write some events into the topic

A Kafka client communicates with the Kafka brokers via the network for writing (or reading) events. Once received, the brokers will store the events in a durable and fault-tolerant manner for as long as you need—even forever.

Run the console producer client to write a few events into your topic. By default, each line you enter will result in a separate event being written to the topic.

$ bin/kafka-console-producer.sh --topic quickstart-events --bootstrap-server localhost:9092

>This is my first event

>This is my second event

You can stop the producer client with Ctrl-C at any time.

Step 5: Read the events

Open another terminal session and run the console consumer client to read the events you just created:

$ bin/kafka-console-consumer.sh --topic quickstart-events --from-beginning --bootstrap-server localhost:9092

This is my first event

This is my second event

You can stop the consumer client with Ctrl-C at any time.

Feel free to experiment: for example, switch back to your producer terminal (previous step) to write additional events, and see how the events immediately show up in your consumer terminal.

Because events are durably stored in Kafka, they can be read as many times and by as many consumers as you want. You can easily verify this by opening yet another terminal session and re-running the previous command.

Step 6: Import/export your data as streams of events with Kafka Connect

You probably have lots of data in existing systems like relational databases or traditional messaging systems, along with many applications that already use these systems. Kafka Connect allows you to continuously ingest data from external systems into Kafka, and vice versa. It is an extensible tool that runs connectors , which implement the custom logic for interacting with an external system. It is thus very easy to integrate existing systems with Kafka. To make this process even easier, there are hundreds of such connectors readily available.

In this quickstart we’ll see how to run Kafka Connect with simple connectors that import data from a file to a Kafka topic and export data from a Kafka topic to a file.

First, make sure to add connect-file-4.0.0.jar to the plugin.path property in the Connect worker’s configuration. For the purpose of this quickstart we’ll use a relative path and consider the connectors’ package as an uber jar, which works when the quickstart commands are run from the installation directory. However, it’s worth noting that for production deployments using absolute paths is always preferable. See plugin.path for a detailed description of how to set this config.

Edit the config/connect-standalone.properties file, add or change the plugin.path configuration property to match the following, and save the file:

$ echo "plugin.path=libs/connect-file-4.0.0.jar" >> config/connect-standalone.properties

Then, start by creating some seed data to test with:

$ echo -e "foo\nbar" > test.txt

Or on Windows:

$ echo foo > test.txt

$ echo bar >> test.txt

Next, we’ll start two connectors running in standalone mode, which means they run in a single, local, dedicated process. We provide three configuration files as parameters. The first is always the configuration for the Kafka Connect process, containing common configuration such as the Kafka brokers to connect to and the serialization format for data. The remaining configuration files each specify a connector to create. These files include a unique connector name, the connector class to instantiate, and any other configuration required by the connector.

$ bin/connect-standalone.sh config/connect-standalone.properties config/connect-file-source.properties config/connect-file-sink.properties

These sample configuration files, included with Kafka, use the default local cluster configuration you started earlier and create two connectors: the first is a source connector that reads lines from an input file and produces each to a Kafka topic and the second is a sink connector that reads messages from a Kafka topic and produces each as a line in an output file.

During startup you’ll see a number of log messages, including some indicating that the connectors are being instantiated. Once the Kafka Connect process has started, the source connector should start reading lines from test.txt and producing them to the topic connect-test, and the sink connector should start reading messages from the topic connect-test and writing them to the file test.sink.txt. We can verify the data has been delivered through the entire pipeline by examining the contents of the output file:

$ more test.sink.txt

foo

bar

Note that the data is being stored in the Kafka topic connect-test, so we can also run a console consumer to see the data in the topic (or use custom consumer code to process it):

$ bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic connect-test --from-beginning

{"schema":{"type":"string","optional":false},"payload":"foo"}

{"schema":{"type":"string","optional":false},"payload":"bar"}

…

The connectors continue to process data, so we can add data to the file and see it move through the pipeline:

$ echo "Another line" >> test.txt

You should see the line appear in the console consumer output and in the sink file.

Step 7: Process your events with Kafka Streams

Once your data is stored in Kafka as events, you can process the data with the Kafka Streams client library for Java/Scala. It allows you to implement mission-critical real-time applications and microservices, where the input and/or output data is stored in Kafka topics. Kafka Streams combines the simplicity of writing and deploying standard Java and Scala applications on the client side with the benefits of Kafka’s server-side cluster technology to make these applications highly scalable, elastic, fault-tolerant, and distributed. The library supports exactly-once processing, stateful operations and aggregations, windowing, joins, processing based on event-time, and much more.

To give you a first taste, here’s how one would implement the popular WordCount algorithm:

KStream<String, String> textLines = builder.stream("quickstart-events");

KTable<String, Long> wordCounts = textLines

.flatMapValues(line -> Arrays.asList(line.toLowerCase().split(" ")))

.groupBy((keyIgnored, word) -> word)

.count();

wordCounts.toStream().to("output-topic", Produced.with(Serdes.String(), Serdes.Long()));
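To see what this topology computes without running a cluster, the same counting logic can be expressed over a fixed list of lines with plain Java streams. This is only a sketch of the computation, not Kafka Streams code: `WordCountSketch` and `countWords` are hypothetical names, the input lines are the two events written earlier in this quickstart, and the result is a one-off snapshot rather than a continuously updated table.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;
import java.util.stream.Collectors;

class WordCountSketch {
    // Lower-case each line, split it on single spaces, and count occurrences per word.
    static Map<String, Long> countWords(List<String> lines) {
        return lines.stream()
                .flatMap(line -> Arrays.stream(line.toLowerCase().split(" ")))
                .collect(Collectors.groupingBy(Function.identity(), TreeMap::new, Collectors.counting()));
    }

    public static void main(String[] args) {
        List<String> lines = List.of("This is my first event", "This is my second event");
        System.out.println(countWords(lines));
        // prints {event=2, first=1, is=2, my=2, second=1, this=2}
    }
}
```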

The Kafka Streams demo and the app development tutorial demonstrate how to code and run such a streaming application from start to finish.

Step 8: Terminate the Kafka environment

Now that you have reached the end of the quickstart, feel free to tear down the Kafka environment, or continue playing around.

- Stop the producer and consumer clients with Ctrl-C, if you haven’t done so already.

- Stop the Kafka broker with Ctrl-C.

If you also want to delete any data of your local Kafka environment including any events you have created along the way, run the command:

$ rm -rf /tmp/kafka-logs /tmp/kraft-combined-logs

Congratulations!

You have successfully finished the Apache Kafka quickstart.

To learn more, we suggest the following next steps:

- Read through the brief Introduction to learn how Kafka works at a high level, its main concepts, and how it compares to other technologies. To understand Kafka in more detail, head over to the Documentation.

- Browse through the Use Cases to learn how other users in our world-wide community are getting value out of Kafka.

- Join a local Kafka meetup group and watch talks from Kafka Summit, the main conference of the Kafka community.

4 - Ecosystem

There are a plethora of tools that integrate with Kafka outside the main distribution. The ecosystem page lists many of these, including stream processing systems, Hadoop integration, monitoring, and deployment tools.

5 - Upgrading

Upgrading to 4.0.0

Upgrading Clients to 4.0.0

For a rolling upgrade:

- Upgrade the clients one at a time: shut down the client, update the code, and restart it.

- Clients (including Streams and Connect) must be on version 2.1 or higher before upgrading to 4.0. Many deprecated APIs were removed in Kafka 4.0. For more information about the compatibility, please refer to the compatibility matrix or KIP-1124.
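The minimum-version rule above could be checked mechanically before an upgrade. The helper below is a hypothetical sketch, not a Kafka tool: `compareVersions` and `meetsMinimumForUpgrade` are illustrative names, and version strings are assumed to be purely numeric and dot-separated (for example 2.1 or 3.9.0).

```java
class VersionCheckSketch {
    // Compares dotted numeric versions such as "2.1" or "3.9.0"; missing parts count as 0.
    static int compareVersions(String a, String b) {
        String[] x = a.split("\\.");
        String[] y = b.split("\\.");
        for (int i = 0; i < Math.max(x.length, y.length); i++) {
            int xi = i < x.length ? Integer.parseInt(x[i]) : 0;
            int yi = i < y.length ? Integer.parseInt(y[i]) : 0;
            if (xi != yi) {
                return Integer.compare(xi, yi);
            }
        }
        return 0;
    }

    // True if the client version satisfies the "2.1 or higher" requirement from the text.
    static boolean meetsMinimumForUpgrade(String clientVersion) {
        return compareVersions(clientVersion, "2.1") >= 0;
    }

    public static void main(String[] args) {
        for (String v : new String[] {"2.0.1", "2.1", "2.10.0", "3.9.0"}) {
            System.out.println(v + " ok for 4.0 upgrade: " + meetsMinimumForUpgrade(v));
        }
    }
}
```

Comparing the parts as integers rather than as strings matters here: a plain string comparison would rank 2.10.0 below 2.9.0.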

Upgrading Servers to 4.0.0 from any version 3.3.x through 3.9.x

Note: Apache Kafka 4.0 only supports KRaft mode - ZooKeeper mode has been removed. As such, broker upgrades to 4.0.0 (and higher) require KRaft mode and the software and metadata versions must be at least 3.3.x (the first version when KRaft mode was deemed production ready). For clusters in KRaft mode with versions older than 3.3.x, we recommend upgrading to 3.9.x before upgrading to 4.0.x. Clusters in ZooKeeper mode have to be migrated to KRaft mode before they can be upgraded to 4.0.x.

For a rolling upgrade:

- Upgrade the brokers one at a time: shut down the broker, update the code, and restart it. Once you have done so, the brokers will be running the latest version and you can verify that the cluster’s behavior and performance meet expectations.

- Once the cluster’s behavior and performance have been verified, finalize the upgrade by running bin/kafka-features.sh --bootstrap-server localhost:9092 upgrade --release-version 4.0

- Note that cluster metadata downgrade is not supported in this version since it has metadata changes. Every MetadataVersion has a boolean parameter that indicates if there are metadata changes (i.e. IBP_4_0_IV1(23, "4.0", "IV1", true) means this version has metadata changes). Given your current and target versions, a downgrade is only possible if there are no metadata changes in the versions between.

Notable changes in 4.0.0

- Old protocol API versions have been removed. Users should ensure brokers are version 2.1 or higher before upgrading Java clients (including Connect and Kafka Streams which use the clients internally) to 4.0. Similarly, users should ensure their Java clients (including Connect and Kafka Streams) version is 2.1 or higher before upgrading brokers to 4.0. Finally, care also needs to be taken with Kafka clients that are not part of Apache Kafka; please see KIP-896 for the details.

- Apache Kafka 4.0 only supports KRaft mode - ZooKeeper mode has been removed. For details on upgrading, see Upgrading Servers to 4.0.0 from any version 3.3.x through 3.9.x.

- Apache Kafka 4.0 ships with a brand-new group coordinator implementation (see here). Functionally speaking, it implements all the same APIs. There are reasonable defaults, but the behavior of the new group coordinator can be tuned by setting the configurations with prefix group.coordinator.

- The Next Generation of the Consumer Rebalance Protocol (KIP-848) is now Generally Available (GA) in Apache Kafka 4.0. The protocol is automatically enabled on the server when the upgrade to 4.0 is finalized. Note that once the new protocol is used by consumer groups, the cluster can only downgrade to version 3.4.1 or newer. Check here for details.

- Transactions Server Side Defense (KIP-890) brings a strengthened transactional protocol to Apache Kafka 4.0. The new and improved transactional protocol is enabled when the upgrade to 4.0 is finalized. When using 4.0 producer clients, the producer epoch is bumped on every transaction to ensure every transaction includes the intended messages and duplicates are not written as part of the next transaction. Downgrading the protocol is safe. For more information check here.

- Eligible Leader Replicas (KIP-966 Part 1) enhances the replication protocol for Apache Kafka 4.0. Now the KRaft controller keeps track of the data partition replicas that are not included in ISR but are safe to be elected as leader without data loss. Such replicas are stored in the partition metadata as the Eligible Leader Replicas (ELR). For more information check here.

- Since Apache Kafka 4.0.0, we have added a system property (“org.apache.kafka.sasl.oauthbearer.allowed.urls”) to set the allowed URLs as SASL OAUTHBEARER token or jwks endpoints. By default, the value is an empty list. Users should explicitly set the allowed list if necessary.

- A number of deprecated classes, methods, configurations and tools have been removed.

- Common

  - The metrics.jmx.blacklist and metrics.jmx.whitelist configurations were removed from the org.apache.kafka.common.metrics.JmxReporter. Please use metrics.jmx.exclude and metrics.jmx.include respectively instead.

  - The auto.include.jmx.reporter configuration was removed. The metric.reporters configuration is now set to org.apache.kafka.common.metrics.JmxReporter by default.

  - The constructor org.apache.kafka.common.metrics.JmxReporter with string argument was removed. See KIP-606 for details.

  - The bufferpool-wait-time-total, io-waittime-total, and iotime-total metrics were removed. Please use bufferpool-wait-time-ns-total, io-wait-time-ns-total, and io-time-ns-total metrics as replacements, respectively.

  - The kafka.common.requests.DescribeLogDirsResponse.LogDirInfo class was removed. Please use the kafka.clients.admin.DescribeLogDirsResult.descriptions() and kafka.clients.admin.DescribeLogDirsResult.allDescriptions() methods instead.

  - The kafka.common.requests.DescribeLogDirsResponse.ReplicaInfo class was removed. Please use the kafka.clients.admin.DescribeLogDirsResult.descriptions() and kafka.clients.admin.DescribeLogDirsResult.allDescriptions() methods instead.

  - The org.apache.kafka.common.security.oauthbearer.secured.OAuthBearerLoginCallbackHandler class was removed. Please use the org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginCallbackHandler class instead.

  - The org.apache.kafka.common.security.oauthbearer.secured.OAuthBearerValidatorCallbackHandler class was removed. Please use the org.apache.kafka.common.security.oauthbearer.OAuthBearerValidatorCallbackHandler class instead.

  - The org.apache.kafka.common.errors.NotLeaderForPartitionException class was removed. The org.apache.kafka.common.errors.NotLeaderOrFollowerException is returned if a request could not be processed because the broker is not the leader or follower for a topic partition.

  - The org.apache.kafka.clients.producer.internals.DefaultPartitioner and org.apache.kafka.clients.producer.UniformStickyPartitioner classes were removed.

  - The log.message.format.version and message.format.version configs were removed.

  - The function onNewBatch in the org.apache.kafka.clients.producer.Partitioner class was removed.

  - The default properties files for KRaft mode are no longer stored in the separate config/kraft directory since ZooKeeper has been removed. These files have been consolidated with other configuration files. Now all configuration files are in the config directory.

  - The --bootstrap-server option only accepts a comma-separated value, such as host1:port1,host2:port2,.... Providing other formats, like space-separated bootstrap servers (e.g., host1:port1 host2:port2 host3:port3), will result in an exception, even though this was allowed in Apache Kafka versions prior to 4.0.

- Broker

- The

delegation.token.master.keyconfiguration was removed. Please usedelegation.token.secret.keyinstead. - The

offsets.commit.required.acksconfiguration was removed. See KIP-1041 for details. - The

log.message.timestamp.difference.max.msconfiguration was removed. Please uselog.message.timestamp.before.max.msandlog.message.timestamp.after.max.msinstead. See KIP-937 for details. - The

remote.log.manager.copier.thread.pool.sizeconfiguration default value was changed to 10 from -1. Values of -1 are no longer valid. A minimum of 1 or higher is valid. See KIP-1030 - The

remote.log.manager.expiration.thread.pool.sizeconfiguration default value was changed to 10 from -1. Values of -1 are no longer valid. A minimum of 1 or higher is valid. See KIP-1030 - The

remote.log.manager.thread.pool.sizeconfiguration default value was changed to 2 from 10. See KIP-1030 - The minimum

segment.bytes/log.segment.byteshas changed from 14 bytes to 1MB. See KIP-1030

- MirrorMaker

  - The original MirrorMaker (MM1) and related classes were removed. Please use the Connect-based MirrorMaker (MM2), as described in the Geo-Replication section.

  - The `use.incremental.alter.configs` configuration was removed from `MirrorSourceConnector`. The modified behavior is identical to the previous `required` configuration, therefore users should ensure that brokers in the target cluster are at least running 2.3.0.

  - The `add.source.alias.to.metrics` configuration was removed from `MirrorSourceConnector`. The source cluster alias is now always added to the metrics.

  - The `config.properties.blacklist` was removed from the `org.apache.kafka.connect.mirror.MirrorSourceConfig`. Please use `config.properties.exclude` instead.

  - The `topics.blacklist` was removed from the `org.apache.kafka.connect.mirror.MirrorSourceConfig`. Please use `topics.exclude` instead.

  - The `groups.blacklist` was removed from the `org.apache.kafka.connect.mirror.MirrorSourceConfig`. Please use `groups.exclude` instead.

- Tools

  - The `kafka.common.MessageReader` class was removed. Please use the `org.apache.kafka.tools.api.RecordReader` interface to build custom readers for the `kafka-console-producer` tool.

  - The `kafka.tools.DefaultMessageFormatter` class was removed. Please use the `org.apache.kafka.tools.consumer.DefaultMessageFormatter` class instead.

  - The `kafka.tools.LoggingMessageFormatter` class was removed. Please use the `org.apache.kafka.tools.consumer.LoggingMessageFormatter` class instead.

  - The `kafka.tools.NoOpMessageFormatter` class was removed. Please use the `org.apache.kafka.tools.consumer.NoOpMessageFormatter` class instead.

  - The `--whitelist` option was removed from the `kafka-console-consumer` command line tool. Please use `--include` instead.

  - Redirections from the old tools packages have been removed: `kafka.admin.FeatureCommand`, `kafka.tools.ClusterTool`, `kafka.tools.EndToEndLatency`, `kafka.tools.StateChangeLogMerger`, `kafka.tools.StreamsResetter`, `kafka.tools.JmxTool`.

  - The `--authorizer`, `--authorizer-properties`, and `--zk-tls-config-file` options were removed from the `kafka-acls` command line tool. Please use `--bootstrap-server` or `--bootstrap-controller` instead.

  - The `kafka.serializer.Decoder` trait was removed. Please use the `org.apache.kafka.tools.api.Decoder` interface to build custom decoders for the `kafka-dump-log` tool.

  - The `kafka.coordinator.group.OffsetsMessageFormatter` class was removed. Please use the `org.apache.kafka.tools.consumer.OffsetsMessageFormatter` class instead.

  - The `kafka.coordinator.group.GroupMetadataMessageFormatter` class was removed. Please use the `org.apache.kafka.tools.consumer.GroupMetadataMessageFormatter` class instead.

  - The `kafka.coordinator.transaction.TransactionLogMessageFormatter` class was removed. Please use the `org.apache.kafka.tools.consumer.TransactionLogMessageFormatter` class instead.

  - The `--topic-white-list` option was removed from the `kafka-replica-verification` command line tool. Please use `--topics-include` instead.

  - The `--broker-list` option was removed from the `kafka-verifiable-consumer` command line tool. Please use `--bootstrap-server` instead.

  - `kafka-configs.sh` now uses the `incrementalAlterConfigs` API to alter broker configurations instead of the deprecated `alterConfigs` API, and it will fail directly if the broker doesn’t support the `incrementalAlterConfigs` API, which means the broker version is prior to 2.3.x. See KIP-1011 for more details.

  - The `kafka.admin.ZkSecurityMigrator` tool was removed.

- Connect

  - The `whitelist` and `blacklist` configurations were removed from the `org.apache.kafka.connect.transforms.ReplaceField` transformation. Please use `include` and `exclude` respectively instead.

  - The `onPartitionsRevoked(Collection<TopicPartition>)` and `onPartitionsAssigned(Collection<TopicPartition>)` methods were removed from `SinkTask`.

  - The `commitRecord(SourceRecord)` method was removed from `SourceTask`.

- Consumer

  - The `poll(long)` method was removed from the consumer. Please use `poll(Duration)` instead. Note that there is a difference in behavior between the two methods. The `poll(Duration)` method does not block beyond the timeout awaiting partition assignment, whereas the earlier `poll(long)` method used to wait beyond the timeout.

  - The `committed(TopicPartition)` and `committed(TopicPartition, Duration)` methods were removed from the consumer. Please use `committed(Set<TopicPartition>)` and `committed(Set<TopicPartition>, Duration)` instead.

  - The `setException(KafkaException)` method was removed from the `org.apache.kafka.clients.consumer.MockConsumer`. Please use `setPollException(KafkaException)` instead.

- Producer

  - The `enable.idempotence` configuration will no longer automatically fall back when the `max.in.flight.requests.per.connection` value exceeds 5.

  - The deprecated `sendOffsetsToTransaction(Map<TopicPartition, OffsetAndMetadata>, String)` method has been removed from the Producer API.

  - The default `linger.ms` changed from 0 to 5 in Apache Kafka 4.0 as the efficiency gains from larger batches typically result in similar or lower producer latency despite the increased linger.

- Admin client

  - The `alterConfigs` method was removed from the `org.apache.kafka.clients.admin.Admin`. Please use `incrementalAlterConfigs` instead.

  - The `org.apache.kafka.common.ConsumerGroupState` enumeration and related methods have been deprecated. Please use `GroupState` instead which applies to all types of group.

  - The `Admin.describeConsumerGroups` method used to return a `ConsumerGroupDescription` in state `DEAD` if the group ID was not found. In Apache Kafka 4.0, the `GroupIdNotFoundException` is thrown instead as part of the support for new types of group.

  - The `org.apache.kafka.clients.admin.DeleteTopicsResult.values()` method was removed. Please use `org.apache.kafka.clients.admin.DeleteTopicsResult.topicNameValues()` instead.

  - The `org.apache.kafka.clients.admin.TopicListing.TopicListing(String, boolean)` method was removed. Please use `org.apache.kafka.clients.admin.TopicListing.TopicListing(String, Uuid, boolean)` instead.

  - The `org.apache.kafka.clients.admin.ListConsumerGroupOffsetsOptions.topicPartitions(List<TopicPartition>)` method was removed. Please use `org.apache.kafka.clients.admin.Admin.listConsumerGroupOffsets(Map<String, ListConsumerGroupOffsetsSpec>, ListConsumerGroupOffsetsOptions)` instead.

  - The deprecated `dryRun` methods were removed from the `org.apache.kafka.clients.admin.UpdateFeaturesOptions`. Please use `validateOnly` instead.

  - The constructor `org.apache.kafka.clients.admin.FeatureUpdate` with short and boolean arguments was removed. Please use the constructor that accepts short and the specified `UpgradeType` enum instead.

  - The `allowDowngrade` method was removed from the `org.apache.kafka.clients.admin.FeatureUpdate`.

  - The `org.apache.kafka.clients.admin.DescribeTopicsResult.DescribeTopicsResult(Map<String, KafkaFuture<TopicDescription>>)` method was removed. Please use `org.apache.kafka.clients.admin.DescribeTopicsResult.DescribeTopicsResult(Map<Uuid, KafkaFuture<TopicDescription>>, Map<String, KafkaFuture<TopicDescription>>)` instead.

  - The `values()` method was removed from the `org.apache.kafka.clients.admin.DescribeTopicsResult`. Please use `topicNameValues()` instead.

  - The `all()` method was removed from the `org.apache.kafka.clients.admin.DescribeTopicsResult`. Please use `allTopicNames()` instead.

- Kafka Streams

  - All public APIs deprecated in Apache Kafka 3.6 or an earlier release have been removed, with the exception of `JoinWindows.of()` and `JoinWindows#grace()`. See KAFKA-17531 for details.

  - The most important changes are highlighted in the Kafka Streams upgrade guide.

  - For a full list of changes, see KAFKA-12822.

  - If you are using `KStream#transformValues()`, which was removed with the Apache Kafka 4.0.0 release, and you need to rewrite your program to use `KStream#processValues()` instead, pay close attention to the migration guide.

- Other changes:

  - The minimum Java version required by clients and Kafka Streams applications has been increased from Java 8 to Java 11, while brokers, Connect, and tools now require Java 17. See KIP-750 and KIP-1013 for more details.

  - Java 23 support has been added in Apache Kafka 4.0.

  - Scala 2.12 support has been removed in Apache Kafka 4.0. See KIP-751 for more details.

  - The logging framework has been migrated from Log4j to Log4j2. Users can use the log4j-transform-cli tool to automatically convert their existing Log4j configuration files to Log4j2 format. See log4j-transform-cli for more details. Log4j2 provides limited compatibility for Log4j configurations. See Use Log4j 1 to Log4j 2 bridge for more information.

  - `KafkaLog4jAppender` has been removed; users should migrate to the log4j2 appender. See KafkaAppender for more details.

  - The `--delete-config` option in the `kafka-topics` command line tool has been deprecated.

  - For implementors of `RemoteLogMetadataManager` (RLMM), a new API `nextSegmentWithTxnIndex` is introduced in RLMM to allow the implementation to return the next segment metadata with a transaction index. This API is used when consumers are configured with the isolation level `READ_COMMITTED`. See KIP-1058 for more details.

  - The criteria for identifying internal topics in `ReplicationPolicy` and `DefaultReplicationPolicy` have been updated to enable the replication of topics that appear to be internal but aren’t truly internal to Kafka and MirrorMaker 2. See KIP-1074 for more details.

  - KIP-714 is now enabled for Kafka Streams via KIP-1076. This makes it possible to collect, via a broker-side plugin, not only the metrics of the clients used internally by a Kafka Streams application but also the metrics of the Kafka Streams runtime itself.

  - The default value of `num.recovery.threads.per.data.dir` has been changed from 1 to 2. The impact of this is faster recovery after an unclean shutdown at the expense of extra IO cycles. See KIP-1030.

  - The default value of `message.timestamp.after.max.ms` has been changed from Long.Max to 1 hour. As a result, messages with a timestamp more than 1 hour in the future will be rejected when `message.timestamp.type=CreateTime` is set. See KIP-1030.

  - Introduced in KIP-890, the `TransactionAbortableException` enhances error handling within transactional operations by clearly indicating scenarios where transactions should be aborted due to errors. It is important for applications to properly manage both `TimeoutException` and `TransactionAbortableException` when working with transaction producers.

    - `TimeoutException`: This exception indicates that a transactional operation has timed out. Given the risk of message duplication that can arise from retrying operations after a timeout (potentially violating exactly-once semantics), applications should treat timeouts as reasons to abort the ongoing transaction.

    - `TransactionAbortableException`: Specifically introduced to signal errors that should lead to transaction abortion. Handling this exception properly is critical for maintaining the integrity of transactional processing.

    - To ensure seamless operation and compatibility with future Kafka versions, developers are encouraged to update their error-handling logic to treat both exceptions as triggers for aborting transactions. This approach is pivotal for preserving exactly-once semantics.

    - See KIP-890 and KIP-1050 for more details.
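As a rough illustration of the new one-hour default for `message.timestamp.after.max.ms` noted in the list above, the following shell sketch checks whether a record timestamp would count as too far in the future. The function name and its arguments are hypothetical, and the strict greater-than comparison is an assumption rather than a copy of the broker's validation logic.

```shell
# Sketch of the future-timestamp rule; not the broker's actual validation code.
# It only applies when message.timestamp.type=CreateTime.
# Usage: is_too_far_in_future NOW_MS RECORD_TS_MS [AFTER_MAX_MS]
# Succeeds (exit 0) when the record timestamp is more than AFTER_MAX_MS ahead of NOW_MS.
is_too_far_in_future() (
  now_ms=$1
  record_ts_ms=$2
  after_max_ms=${3:-3600000}   # 1 hour in milliseconds, the 4.0 default described above
  [ $((record_ts_ms - now_ms)) -gt "$after_max_ms" ]
)
```

A producer test harness could call this with the current time in milliseconds to flag clients with skewed clocks before an upgrade.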

Upgrading to 3.9.0 and older versions

See Upgrading From Previous Versions in the 3.9 documentation.

6 - KRaft vs ZooKeeper

Differences Between KRaft mode and ZooKeeper mode

Removed ZooKeeper Features

This section documents differences in behavior between KRaft mode and ZooKeeper mode. Specifically, several configurations, metrics and features have changed or are no longer required in KRaft mode. To migrate an existing cluster from ZooKeeper mode to KRaft mode, please refer to the ZooKeeper to KRaft Migration section.

Configurations

Removed password encoder-related configurations. These configurations were used in ZooKeeper mode to define the key and backup key for encrypting sensitive data (e.g., passwords), specify the algorithm and key generation method for password encryption (e.g., AES, RSA), and control the key length and encryption strength.

* `password.encoder.secret`

* `password.encoder.old.secret`

* `password.encoder.keyfactory.algorithm`

* `password.encoder.cipher.algorithm`

* `password.encoder.key.length`

* `password.encoder.iterations`

In KRaft mode, Kafka stores sensitive data in records, and the data is not encrypted in Kafka.

Removed `control.plane.listener.name`. In ZooKeeper mode, Kafka relies on ZooKeeper to manage metadata, but some internal operations (e.g., communication between the controller (a.k.a. broker controller) and brokers) still require Kafka’s internal control plane for coordination.

In KRaft mode, Kafka eliminates its dependency on ZooKeeper, and the control plane functionality is fully integrated into Kafka itself. The process roles are clearly separated: brokers handle data-related requests, while the controllers (a.k.a. quorum controllers) manage metadata-related requests. The controllers use the Raft protocol for internal communication, which operates differently from the ZooKeeper model. Use the following parameters to configure the control plane listener:

* `controller.listener.names`

* `listeners`

* `listener.security.protocol.map`

Removed configurations related to graceful broker shutdown. These configurations were used in ZooKeeper mode to define the maximum number of retries and the retry backoff time for controlled shutdowns. They helped reduce the risk of unplanned leader changes and data inconsistencies.

* `controlled.shutdown.max.retries`

* `controlled.shutdown.retry.backoff.ms`

In KRaft mode, Kafka uses the Raft protocol to manage metadata. The broker shutdown process differs from ZooKeeper mode as it is managed by the quorum-based controller. The shutdown process is more reliable and efficient due to automated leader transfers and metadata updates handled by the controller.

Removed the broker id generation-related configurations. These configurations were used in ZooKeeper mode to specify the broker id auto generation and control the broker id generation process.

* `reserved.broker.max.id`

* `broker.id.generation.enable`

Kafka uses the node id in KRaft mode to identify servers.

* `node.id`

Removed broker protocol version-related configurations. These configurations were used in ZooKeeper mode to define the communication protocol version between brokers. In KRaft mode, Kafka uses `metadata.version` to control the feature level of the cluster, which can be managed using `bin/kafka-features.sh`.

* `inter.broker.protocol.version`

Removed support for dynamically updating configurations that relied on ZooKeeper. In KRaft mode, changing these configurations requires restarting the broker/controller.

* `advertised.listeners`

Removed the leader imbalance configuration used only in ZooKeeper.

`leader.imbalance.per.broker.percentage` was used to limit the preferred leader election frequency in ZooKeeper mode.

* `leader.imbalance.per.broker.percentage`

Removed ZooKeeper related configurations.

* `zookeeper.connect`

* `zookeeper.session.timeout.ms`

* `zookeeper.connection.timeout.ms`

* `zookeeper.set.acl`

* `zookeeper.max.in.flight.requests`

* `zookeeper.ssl.client.enable`

* `zookeeper.clientCnxnSocket`

* `zookeeper.ssl.keystore.location`

* `zookeeper.ssl.keystore.password`

* `zookeeper.ssl.keystore.type`

* `zookeeper.ssl.truststore.location`

* `zookeeper.ssl.truststore.password`

* `zookeeper.ssl.truststore.type`

* `zookeeper.ssl.protocol`

* `zookeeper.ssl.enabled.protocols`

* `zookeeper.ssl.cipher.suites`

* `zookeeper.ssl.endpoint.identification.algorithm`

* `zookeeper.ssl.crl.enable`

* `zookeeper.ssl.ocsp.enable`
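Before moving a configuration file to KRaft mode, it can help to scan it for the settings this section lists as removed. The sketch below is one way to do that with `grep` and `sed`. The function name is hypothetical, the key pattern is transcribed from this section (any `zookeeper.*` or `password.encoder.*` key is matched by prefix), and only `key=value` and `key:value` lines are recognized. It is not an official Kafka tool.

```shell
# Print each key in a properties file that this section lists as removed in KRaft mode.
# Usage: find_removed_kraft_configs PATH_TO_PROPERTIES_FILE
find_removed_kraft_configs() (
  # Build the alternation of removed keys piece by piece to keep lines short.
  keys='zookeeper\.[A-Za-z.]+|password\.encoder\.[a-z.]+|control\.plane\.listener\.name'
  keys="$keys"'|controlled\.shutdown\.(max\.retries|retry\.backoff\.ms)'
  keys="$keys"'|reserved\.broker\.max\.id|broker\.id\.generation\.enable'
  keys="$keys"'|inter\.broker\.protocol\.version|leader\.imbalance\.per\.broker\.percentage'
  # Commented-out lines start with '#', so they do not match the anchored pattern.
  grep -E "^[[:space:]]*($keys)[[:space:]]*[=:]" "$1" |
    sed -E 's/^[[:space:]]*([^=:[:space:]]+).*/\1/'
)
```

Running it against a server properties file prints one offending key per line, in file order, and prints nothing when the file is clean.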

Dynamic Log Levels

The dynamic log levels feature allows you to change the log4j settings of a running broker or controller process without restarting it. The command-line syntax for setting dynamic log levels on brokers has not changed in KRaft mode. Here is an example of setting the log level on a broker:

    ./bin/kafka-configs.sh --bootstrap-server localhost:9092 \
      --entity-type broker-loggers \
      --entity-name 1 \
      --alter \
      --add-config org.apache.kafka.raft.KafkaNetworkChannel=TRACE

When setting dynamic log levels on the controllers, the `--bootstrap-controller` flag must be used. Here is an example of setting the log level on a controller:

    ./bin/kafka-configs.sh --bootstrap-controller localhost:9093 \
      --entity-type broker-loggers \
      --entity-name 1 \
      --alter \
      --add-config org.apache.kafka.raft.KafkaNetworkChannel=TRACE

Note that the entity-type must be specified as broker-loggers, even though we are changing a controller’s log level rather than a broker’s log level.

When changing the log level of a combined node, which has both broker and controller roles, either `--bootstrap-server` or `--bootstrap-controller` may be used. Combined nodes have only a single set of log levels; there are not different log levels for the broker and controller parts of the process.
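The flag choice described above can be captured in a small helper that prints the matching `kafka-configs.sh` command line. This is only a sketch: the function name and argument order are hypothetical, and it prints the command rather than running it.

```shell
# Print the kafka-configs.sh command for changing one logger on one node.
# Usage: log_level_command ROLE HOST:PORT NODE_ID LOGGER LEVEL   (ROLE: broker | controller)
log_level_command() (
  case "$1" in
    broker)     flag=--bootstrap-server ;;
    controller) flag=--bootstrap-controller ;;
    *) echo "unknown role: $1" >&2; exit 2 ;;
  esac
  # The entity type stays broker-loggers for both roles, as noted above.
  printf './bin/kafka-configs.sh %s %s --entity-type broker-loggers --entity-name %s --alter --add-config %s=%s\n' \
    "$flag" "$2" "$3" "$4" "$5"
)
```

For a combined node, either role can be passed, as long as the address is one that the chosen flag can reach.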

Dynamic Controller Configurations

Some Kafka configurations can be changed dynamically, without restarting the process. The command-line syntax for setting dynamic configurations on brokers has not changed in KRaft mode. Here is an example of setting the number of IO threads on a broker:

    ./bin/kafka-configs.sh --bootstrap-server localhost:9092 \
      --entity-type brokers \
      --entity-name 1 \
      --alter \
      --add-config num.io.threads=5

Controllers will apply all applicable cluster-level dynamic configurations. For example, the following command line will change the `max.connections` setting on all of the brokers and all of the controllers in the cluster:

    ./bin/kafka-configs.sh --bootstrap-server localhost:9092 \
      --entity-type brokers \
      --entity-default \
      --alter \
      --add-config max.connections=10000

It is not currently possible to apply a dynamic configuration on only a single controller.

Metrics

Removed the following metrics related to ZooKeeper.

`ControlPlaneNetworkProcessorAvgIdlePercent` monitors the average fraction of time the network processors are idle. `ControlPlaneExpiredConnectionsKilledCount` monitors the total number of connections disconnected, across all processors.

* `ControlPlaneNetworkProcessorAvgIdlePercent`

* `ControlPlaneExpiredConnectionsKilledCount`

In KRaft mode, use the following metrics to monitor the network processors and expired connections:

* `NetworkProcessorAvgIdlePercent`

* `ExpiredConnectionsKilledCount`

Removed the metrics which are only used in ZooKeeper mode.

* `kafka.controller:type=ControllerChannelManager,name=QueueSize`

* `kafka.controller:type=ControllerChannelManager,name=RequestRateAndQueueTimeMs`

* `kafka.controller:type=ControllerEventManager,name=EventQueueSize`

* `kafka.controller:type=ControllerEventManager,name=EventQueueTimeMs`

* `kafka.controller:type=ControllerStats,name=AutoLeaderBalanceRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=ControlledShutdownRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=ControllerChangeRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=ControllerShutdownRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=IdleRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=IsrChangeRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=LeaderAndIsrResponseReceivedRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=LeaderElectionRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=ListPartitionReassignmentRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=LogDirChangeRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=ManualLeaderBalanceRateAndTimeMs`

* `kafka.controller:type=KafkaController,name=MigratingZkBrokerCount`

* `kafka.controller:type=ControllerStats,name=PartitionReassignmentRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=TopicChangeRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=TopicDeletionRateAndTimeMs`

* `kafka.controller:type=KafkaController,name=TopicsIneligibleToDeleteCount`

* `kafka.controller:type=ControllerStats,name=TopicUncleanLeaderElectionEnableRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=UncleanLeaderElectionEnableRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=UncleanLeaderElectionsPerSec`

* `kafka.controller:type=ControllerStats,name=UpdateFeaturesRateAndTimeMs`

* `kafka.controller:type=ControllerStats,name=UpdateMetadataResponseReceivedRateAndTimeMs`

* `kafka.controller:type=KafkaController,name=ActiveBrokerCount`

* `kafka.controller:type=KafkaController,name=ActiveControllerCount`

* `kafka.controller:type=KafkaController,name=ControllerState`

* `kafka.controller:type=KafkaController,name=FencedBrokerCount`

* `kafka.controller:type=KafkaController,name=GlobalPartitionCount`

* `kafka.controller:type=KafkaController,name=GlobalTopicCount`

* `kafka.controller:type=KafkaController,name=OfflinePartitionsCount`

* `kafka.controller:type=KafkaController,name=PreferredReplicaImbalanceCount`

* `kafka.controller:type=KafkaController,name=ReplicasIneligibleToDeleteCount`

* `kafka.controller:type=KafkaController,name=ReplicasToDeleteCount`

* `kafka.controller:type=KafkaController,name=TopicsToDeleteCount`

* `kafka.controller:type=KafkaController,name=ZkMigrationState`

* `kafka.server:type=DelayedOperationPurgatory,name=PurgatorySize,delayedOperation=ElectLeader`

* `kafka.server:type=DelayedOperationPurgatory,name=PurgatorySize,delayedOperation=topic`

* `kafka.server:type=DelayedOperationPurgatory,name=NumDelayedOperations,delayedOperation=ElectLeader`

* `kafka.server:type=DelayedOperationPurgatory,name=NumDelayedOperations,delayedOperation=topic`

* `kafka.server:type=SessionExpireListener,name=SessionState`

* `kafka.server:type=SessionExpireListener,name=ZooKeeperAuthFailuresPerSec`

* `kafka.server:type=SessionExpireListener,name=ZooKeeperDisconnectsPerSec`

* `kafka.server:type=SessionExpireListener,name=ZooKeeperExpiresPerSec`

* `kafka.server:type=SessionExpireListener,name=ZooKeeperReadOnlyConnectsPerSec`

* `kafka.server:type=SessionExpireListener,name=ZooKeeperSaslAuthenticationsPerSec`

* `kafka.server:type=SessionExpireListener,name=ZooKeeperSyncConnectsPerSec`

* `kafka.server:type=ZooKeeperClientMetrics,name=ZooKeeperRequestLatencyMs`

Behavioral Change Reference

This document catalogs the functional and operational differences between ZooKeeper mode and KRaft mode.

- Configuration Value Size Limitation: KRaft mode restricts configuration values to a maximum size of `Short.MAX_VALUE`, which prevents using the append operation to create larger configuration values.

- Policy Class Deployment: In KRaft mode, the `CreateTopicPolicy` and `AlterConfigPolicy` plugins run on the controller instead of the broker. This requires users to deploy the policy class JAR files on the controller and configure the parameters (`create.topic.policy.class.name` and `alter.config.policy.class.name`) on the controller.

  Note: If migrating from ZooKeeper mode, ensure policy JARs are moved from brokers to controllers.

- Custom implementations of `KafkaPrincipalBuilder`: In KRaft mode, custom implementations of `KafkaPrincipalBuilder` must also implement `KafkaPrincipalSerde`; otherwise brokers will not be able to forward requests to the controller.
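The size limit in the first item above can be checked before a configuration value is submitted. The sketch below assumes the limit is counted in bytes of the value as written, which the text does not state explicitly; 32767 is the value of Java's `Short.MAX_VALUE`. The function name is hypothetical.

```shell
# Succeeds (exit 0) when a configuration value fits within Short.MAX_VALUE (32767).
# Assumption: the limit applies to the byte length of the value.
# Usage: fits_kraft_config_limit VALUE
fits_kraft_config_limit() (
  # Wrapping wc in arithmetic expansion strips any padding some wc builds emit.
  size=$(( $(printf '%s' "$1" | wc -c) ))
  [ "$size" -le 32767 ]
)
```

A wrapper script could run this check on the full value that an append would produce and fall back to splitting the setting when it fails.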

7 - Compatibility

Compatibility

With the release of Kafka 4.0, significant changes have been introduced that impact compatibility across various components. To assist users in planning upgrades and ensuring seamless interoperability, a comprehensive compatibility matrix has been prepared.

JDK Compatibility Across Kafka Versions

| Module | Kafka Version | Java 11 | Java 17 | Java 23 |
|---|---|---|---|---|
| Clients | 4.0.0 | ✅ | ✅ | ✅ |
| Streams | 4.0.0 | ✅ | ✅ | ✅ |
| Connect | 4.0.0 | ❌ | ✅ | ✅ |
| Server | 4.0.0 | ❌ | ✅ | ✅ |

Note: Java 8 is removed in Kafka 4.0 and is no longer supported.
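A deployment script can encode the table above as a simple lookup. The sketch below treats the table as a minimum-version rule, which is an assumption: the table itself only lists Java 11, 17, and 23. The function names and the lowercase module names are hypothetical.

```shell
# Minimum Java major version per module for Kafka 4.0.0, transcribed from the table above.
# Usage: min_java_for_module MODULE   (clients | streams | connect | server)
min_java_for_module() {
  case "$1" in
    clients|streams) echo 11 ;;
    connect|server)  echo 17 ;;
    *) echo "unknown module: $1" >&2; return 2 ;;
  esac
}

# Succeeds (exit 0) when JAVA_MAJOR is at least the module's minimum.
# Usage: java_meets_minimum MODULE JAVA_MAJOR
java_meets_minimum() (
  min=$(min_java_for_module "$1") || exit 2
  [ "$2" -ge "$min" ]
)
```

For example, `java_meets_minimum server 11` fails, matching the ❌ entry for the server on Java 11.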

Server Compatibility

| KRaft Cluster Version | Compatibility 4.0 Server (dynamic voter) | Compatibility 4.0 Server (static voter) |
|---|---|---|
| before 3.2.x | ❌ | ❌ |
| 3.3.x | ❌ | ✅ |
| 3.4.x | ❌ | ✅ |
| 3.5.x | ❌ | ✅ |
| 3.6.x | ❌ | ✅ |
| 3.7.x | ❌ | ✅ |
| 3.8.x | ❌ | ✅ |
| 3.9.x | ✅ | ✅ |
| 4.0.x | ✅ | ✅ |

Note: A server cannot be upgraded from static voter to dynamic voter; see KAFKA-16538.

Client/Broker Forward Compatibility

| Kafka Version | Module | Compatibility with Kafka 4.0 | Key Differences/Limitations |
|---|---|---|---|
| 0.x, 1.x, 2.0 | Client | ❌ Not Compatible | Pre-0.10.x protocols are fully removed in Kafka 4.0 (KIP-896). |
| 0.x, 1.x, 2.0 | Streams | ❌ Not Compatible | Pre-0.10.x protocols are fully removed in Kafka 4.0 (KIP-896). |
| 0.x, 1.x, 2.0 | Connect | ❌ Not Compatible | Pre-0.10.x protocols are fully removed in Kafka 4.0 (KIP-896). |
| 2.1 ~ 2.8 | Client | ⚠️ Partially Compatible | More details in the Consumer, Producer, and Admin Client section. |
| 2.1 ~ 2.8 | Streams | ⚠️ Limited Compatibility | More details in the Kafka Streams section. |
| 2.1 ~ 2.8 | Connect | ⚠️ Limited Compatibility | More details in the Connect section. |
| 3.x | Client | ✅ Fully Compatible | |
| 3.x | Streams | ✅ Fully Compatible | |
| 3.x | Connect | ✅ Fully Compatible | |

Note: Starting with Kafka 4.0, the --zookeeper option in AdminClient commands has been removed. Users must use the --bootstrap-server option to interact with the Kafka cluster. This change aligns with the transition to KRaft mode.

8 - Docker

JVM Based Apache Kafka Docker Image

Docker is a popular container runtime. Docker images for the JVM based Apache Kafka can be found on Docker Hub and are available from version 3.7.0.

The Docker image can be pulled from Docker Hub using the following command:

$ docker pull apache/kafka:4.0.0

To fetch the latest version of the Docker image, use the following command:

$ docker pull apache/kafka:latest

To start the Kafka container using this Docker image with default configs and on default port 9092:

$ docker run -p 9092:9092 apache/kafka:4.0.0

GraalVM Based Native Apache Kafka Docker Image

Docker images for the GraalVM Based Native Apache Kafka can be found on Docker Hub and are available from version 3.8.0.

NOTE: This image is experimental and intended for local development and testing purposes only; it is not recommended for production use.

The Docker image can be pulled from Docker Hub using the following command:

$ docker pull apache/kafka-native:4.0.0

To fetch the latest version of the Docker image, use the following command:

$ docker pull apache/kafka-native:latest

To start the Kafka container using this Docker image with default configs and on default port 9092:

$ docker run -p 9092:9092 apache/kafka-native:4.0.0

Usage guide

Detailed instructions for using the Docker image are mentioned here.